1. Why Rust is transforming AI development

The AI landscape is shifting dramatically. While Python has dominated machine learning for years, Rust is emerging as the language of choice for production-grade AI systems. At Plutenium, we've seen this transformation firsthand: teams are prototyping in Python but deploying with Rust.

The reason is simple: modern AI demands speed, safety, and scalability. Rust delivers all three without compromise. Major tech companies like Microsoft, Google, and Meta have announced significant Rust initiatives for their AI infrastructure. Even Elon Musk's xAI uses Rust for all its AI infrastructure, a powerful signal of where the industry is headed.

Key advantages driving adoption:

- Memory safety without garbage collection - Whole classes of bugs, such as use-after-free and data races, are ruled out at compile time

- Zero-cost abstractions - High-level code that compiles to efficient machine code

- Fearless concurrency - True parallel processing across all CPU cores

- Production reliability - Catch bugs at compile time, not in production
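
To make the points above concrete, here is a minimal sketch using only the standard library. The `Label` type and the `classify` function are hypothetical names invented for illustration, not part of any framework. It shows an iterator chain standing in for a hand-written loop, and a `Result` return type that makes the caller deal with bad input before the code will compile.

```rust
/// Hypothetical label type for a toy linear classifier (illustration only).
#[derive(Debug, PartialEq)]
enum Label {
    Spam,
    Ham,
}

/// Dot product written with iterator adapters instead of an index loop.
fn dot(a: &[f32], b: &[f32]) -> f32 {
    a.iter().zip(b).map(|(x, y)| x * y).sum()
}

/// Returns an error instead of panicking when the input shape is wrong,
/// so the type system makes callers handle that case explicitly.
fn classify(weights: &[f32], features: &[f32]) -> Result<Label, String> {
    if weights.len() != features.len() {
        return Err(format!(
            "expected {} features, got {}",
            weights.len(),
            features.len()
        ));
    }
    let score = dot(weights, features);
    Ok(if score > 0.0 { Label::Spam } else { Label::Ham })
}

fn main() {
    let weights = [0.5, -1.0, 2.0];
    // Both the Ok and Err arms must be covered for this `match` to compile.
    match classify(&weights, &[1.0, 1.0, 1.0]) {
        Ok(label) => println!("label: {:?}", label),
        Err(e) => eprintln!("bad input: {}", e),
    }
}
```

Shape mismatches here surface as ordinary values rather than exceptions, which is one way the "catch bugs early" idea tends to show up in everyday Rust code.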

2. Performance advantages over Python

Speed matters in AI. Training models, running inference, and processing massive datasets all demand maximum performance. This is where Rust shines brightest.

Unlike Python, which runs on an interpreter and leans on native extension libraries for speed, Rust compiles directly to machine code. The gains show up even against heavily optimized stacks: Cloudflare's Infire, a custom LLM inference engine written in Rust, delivers up to 7% faster inference than vLLM with lower CPU overhead and better GPU utilization.

Real performance gains:

- Faster execution - Training and inference run at native machine speed

- No GIL limitations - Unlike Python's Global Interpreter Lock, Rust enables true multi-threading

- Predictable latency - Critical for real-time AI applications like fraud detection

- Efficient memory usage - Deploy on edge devices with limited resources
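
As a rough illustration of the multi-threading point, the sketch below splits a slice across OS threads with `std::thread::scope` from the standard library. The function names and the sum-of-squares workload are assumptions chosen for the example, not a benchmark.

```rust
use std::thread;

/// Sum of squares on a single thread, used as the reference result.
fn sum_squares(data: &[u64]) -> u64 {
    data.iter().map(|x| x * x).sum()
}

/// Splits `data` into roughly `workers` chunks and sums each chunk on its
/// own OS thread. Scoped threads borrow `data` directly, and the borrow
/// checker verifies that no thread outlives the slice.
fn parallel_sum_squares(data: &[u64], workers: usize) -> u64 {
    let workers = workers.max(1);
    // Ceiling division so every element lands in some chunk.
    let chunk_len = ((data.len() + workers - 1) / workers).max(1);
    thread::scope(|s| {
        let handles: Vec<_> = data
            .chunks(chunk_len)
            .map(|chunk| s.spawn(move || sum_squares(chunk)))
            .collect();
        // Join every worker and combine the partial sums.
        handles
            .into_iter()
            .map(|h| h.join().unwrap())
            .sum::<u64>()
    })
}

fn main() {
    let data: Vec<u64> = (1..=1000).collect();
    // Fall back to 4 workers if the core count cannot be queried.
    let workers = thread::available_parallelism()
        .map(|n| n.get())
        .unwrap_or(4);
    println!("sum of squares = {}", parallel_sum_squares(&data, workers));
}
```

No locks or copies are needed because each thread only reads its own chunk. In a real pipeline, a work-stealing library would likely be a better fit than hand-rolled chunking.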

"Rust for Python extensions grew 22% year-over-year, showing how teams combine Python's ergonomics with Rust's performance in production."

The hybrid approach is gaining momentum: prototype quickly in Python, then optimize performance-critical components in Rust. Libraries like PyO3 make this integration seamless, letting you write Rust code that works as a Python package.

3. Top Rust AI frameworks you should know

The Rust AI ecosystem has matured rapidly in 2025. Here are the frameworks leading the charge:

Candle - Hugging Face's Rust ML Framework

Built with inference as its primary focus. Candle excels in serverless and edge environments where low latency and small binaries are essential. Key features include minimal overhead, WebAssembly support for browser-based AI, and optimized edge computing performance.

tch-rs - PyTorch Bindings for Rust

Leverage PyTorch's mature ecosystem with Rust's performance benefits. Perfect for teams already invested in PyTorch who want production-grade speed.

Linfa - Rust's Answer to Scikit-Learn

Comprehensive machine learning toolkit covering classification, regression, clustering, and more. Familiar API for Python developers making the transition.

Burn - Flexible Deep Learning Framework

Focuses on modular design and research experimentation. Ideal for teams building custom neural network architectures.

ndarray - Safe Multi-Dimensional Arrays

The foundation for numerical computing in Rust. Efficient, type-safe operations on large datasets.

Some resources to explore these frameworks:

- Official Rust documentation - Start with "The Rust Programming Language" book

- Candle tutorials - Hands-on examples from Hugging Face

- PyO3 documentation - Master Python-Rust integration

- GitHub showcases - Real-world projects demonstrating Rust AI applications

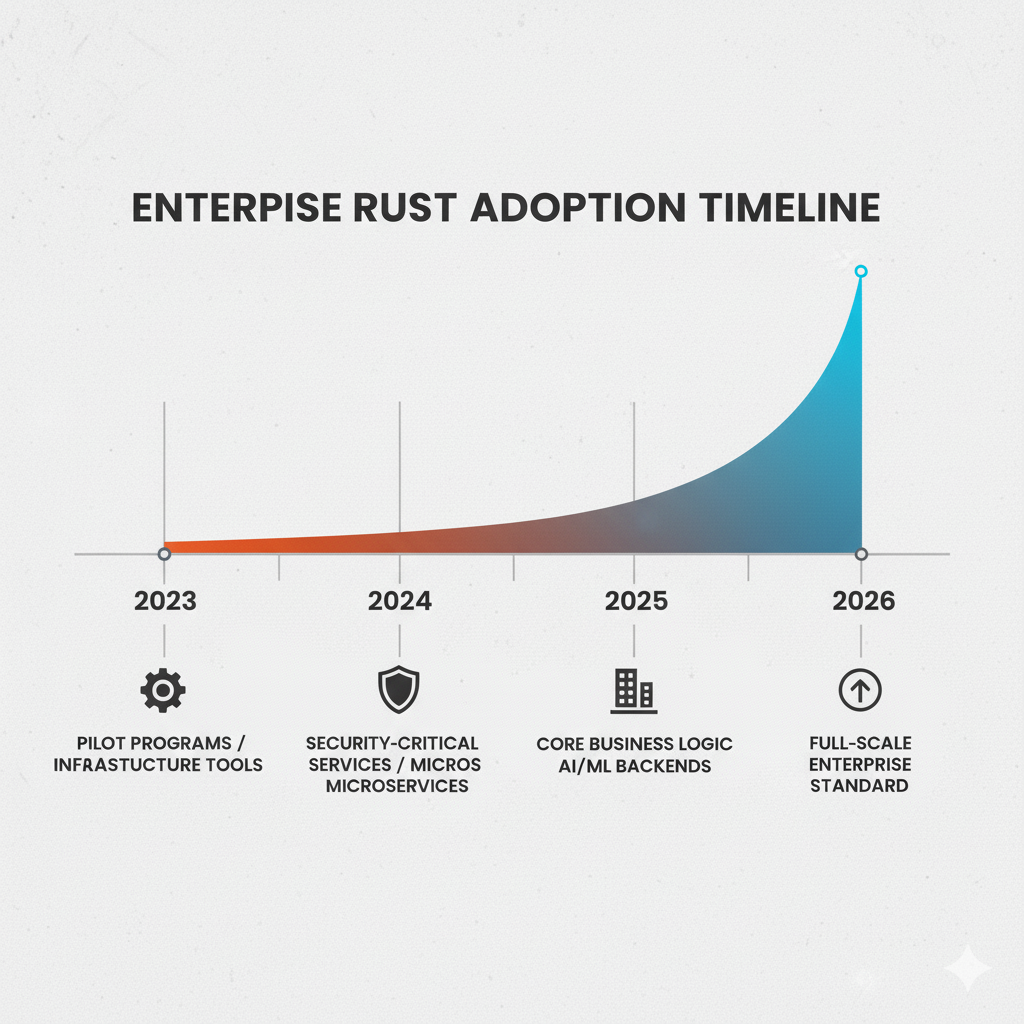

4. Real-world enterprise adoption stories

Enterprise adoption tells the real story. Fortune 500 companies are betting big on Rust for AI infrastructure, and the results speak for themselves.

Cloudflare - Production LLM Infrastructure

Cloudflare developed Infire in Rust to power Llama 3.1 8B across their global edge network. The result: faster inference, lower CPU overhead, and more efficient GPU utilization than competing solutions.

Hugging Face - Critical Performance Components

The Rust tokenizers library ensures blazing-fast text tokenization in NLP pipelines. When millions of tokens need processing per second, Rust's performance becomes non-negotiable.

Tech Giants Leading Migration

Microsoft, Google, Meta, and Amazon have all announced major Rust initiatives for core AI infrastructure. This validation from industry leaders has reduced perceived risk for organizations considering Rust adoption.

"In 2025 Rust has carved out a clear role in production AI workloads demanding predictable performance, tight memory control, and reliability at scale."

The pattern is clear: teams prototype in Python for speed, then deploy with Rust for performance and reliability. This isn't a complete replacement; it's strategic optimization where it matters most.

5. When to choose Rust for your AI project

Choosing the right technology stack is critical. Here's when Rust makes the most sense for AI development:

✓ Production-Grade AI Systems

When reliability, performance, and uptime are non-negotiable. Banking, healthcare, and critical infrastructure demand Rust's safety guarantees.

✓ Edge AI and IoT

Deploy AI models on resource-constrained devices with predictable performance. Smart cameras, wearables, and industrial sensors benefit from Rust's efficiency.

✓ Real-Time Inference

Applications requiring millisecond-level response times. Trading systems, fraud detection, and autonomous vehicles can't afford the latency overhead of an interpreted runtime.

✓ Large-Scale Data Processing

ETL pipelines and data preprocessing for massive datasets. For workloads that split cleanly into independent chunks, Rust's parallel processing capabilities can approach near-linear scaling with core count.

✓ AI Infrastructure

Building the underlying systems that power AI applications. Load balancers, model servers, and orchestration layers benefit from Rust's reliability.

Consider carefully before choosing Rust:

While Rust offers compelling advantages, acknowledge the learning curve. Rust requires developers to think differently about memory management and ownership. However, this upfront investment pays dividends in production stability.
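
For a sense of what "thinking differently about ownership" means in practice, here is a small sketch. The function names are hypothetical and the data is made up. It contrasts a read-only borrow, a mutable borrow, and a move that hands the buffer over for good.

```rust
/// Borrows the buffer read-only: the caller keeps ownership.
fn mean(values: &[f32]) -> f32 {
    if values.is_empty() {
        return 0.0;
    }
    values.iter().sum::<f32>() / values.len() as f32
}

/// Borrows the buffer mutably: scales in place without copying.
fn scale_in_place(values: &mut [f32], factor: f32) {
    for v in values.iter_mut() {
        *v *= factor;
    }
}

/// Takes ownership: the caller can no longer use the Vec afterwards.
fn into_batch(values: Vec<f32>) -> Vec<Vec<f32>> {
    vec![values]
}

fn main() {
    let mut features = vec![1.0, 2.0, 3.0];
    scale_in_place(&mut features, 2.0);
    println!("mean = {}", mean(&features));

    let batch = into_batch(features);
    // println!("{:?}", features); // would not compile: `features` was moved
    println!("batch has {} row(s)", batch.len());
}
```

The commented-out line is the kind of mistake the compiler rejects. That feels restrictive at first, but it is also how use-after-move bugs are kept out of production builds.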

For rapid experimentation and research, Python remains more accessible. The key is choosing the right tool for each stage of your AI journey, and many successful teams use both strategically.

Conclusion: Your next step with Rust AI

The convergence of Rust and AI represents a strategic opportunity for organizations building intelligent systems. The question isn't whether Rust belongs in AI development; it's how quickly you can adopt it to gain a competitive edge.

With major tech companies leading the charge and frameworks maturing rapidly, the Rust AI revolution is transforming how we build intelligent systems. The ability to deliver reliable, high-performance AI systems drives adoption, making Rust an essential tool for modern development teams.

At Plutenium, we help startups and enterprises navigate these technology choices to build scalable, secure, and high-performance solutions. Whether you're exploring Rust for AI development, optimizing existing ML pipelines, or building production-grade infrastructure, we're here to guide your journey.

Ready to explore Rust for your AI projects? Contact Plutenium to discuss how we can help you leverage Rust's performance and safety benefits for your machine learning infrastructure.